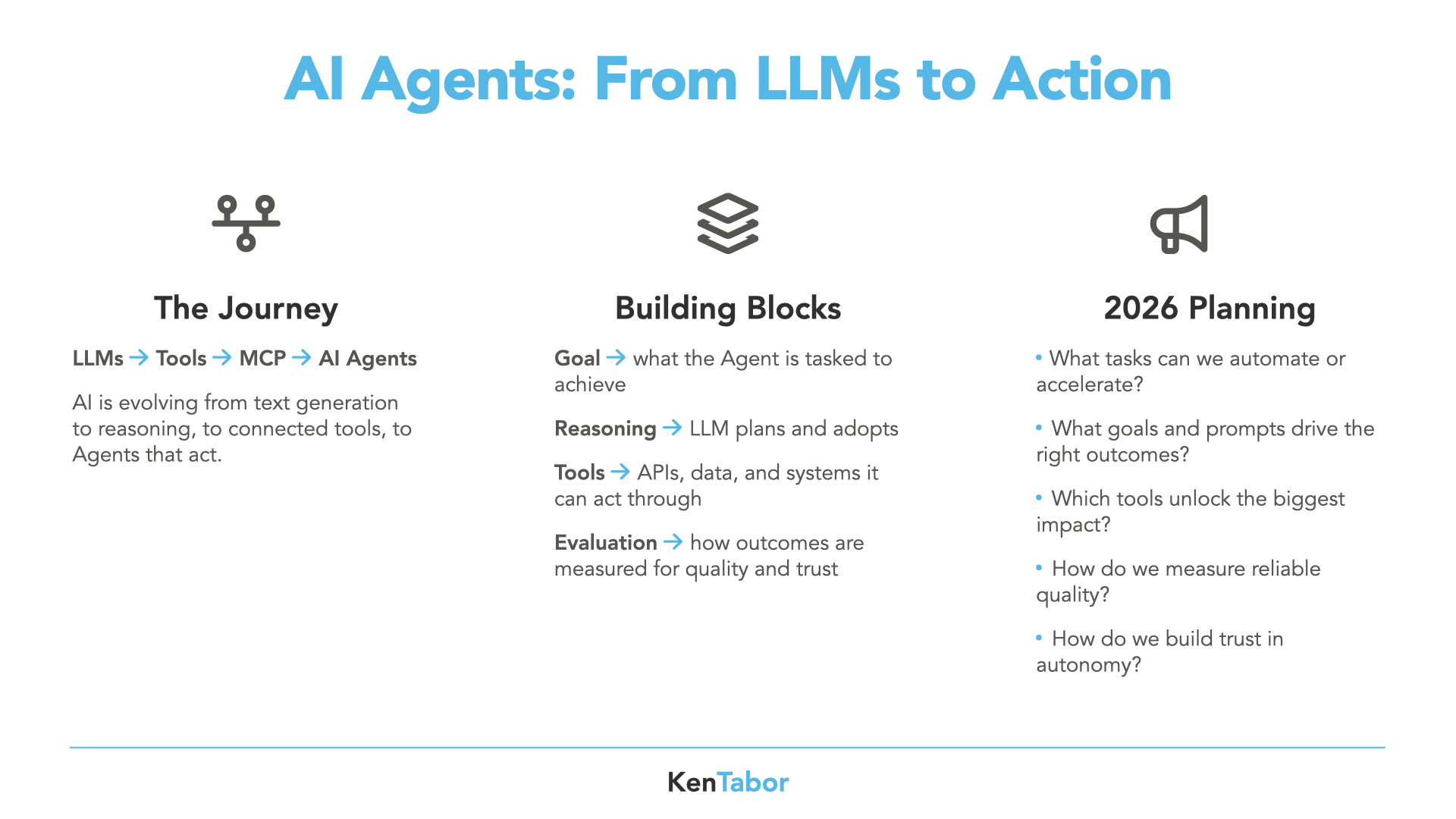

From LLMs to Agents - the Shift in AI

The Evolution of Generative AI

We’ve been practicing with Generative AI since November 2022, when OpenAI introduced ChatGPT. We learned about large language models (LLMs) - the tech that powers Generative AI. Early results with ChatGPT were extraordinary! It appeared to read and write language like humans do.

Now, Generative AI has evolved.

Given a complex goal, it can break it down into subtasks. AI will plan, execute, and reflect as it works. This is reasoning, and it’s an important step forward in turning LLMs into AI Agents. We first saw the difference when OpenAI gave a preview of their o1 model in September 2024.

When LLMs Meet Tools

Modern foundational LLMs (think Google Gemini and OpenAI GPT) aren’t just next-word text predictors. They’re being built to reason about problems to complete goals. What’s the next step for software developers? Tools.

When we connect an LLM to tools - APIs, databases, email, messaging, web search, even a Python runtime - it stops being just a chat partner. It starts to act. It can sense and respond to the world. That’s the beginning of an AI Agent:

- LLM provides reasoning.

- Tools provide action.

- Together, they can plan, execute, reflect, and adapt.

This is when it gets exciting.

Instead of us telling AI exactly what to do step by step, we can give it a goal and watch it work toward it. Sometimes it’ll surprise us by planning in ways we wouldn’t have programmed ourselves.
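To make the plan, execute, reflect loop a little more tangible, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `fake_llm` is a stand-in for a real model call, and the `word_count` and `shout` tools are hypothetical. The point is only the shape of the loop, not any particular vendor's API.

```python
from typing import Callable, Dict, List

# Hypothetical tools: plain Python functions the agent is allowed to call.
def word_count(text: str) -> int:
    return len(text.split())

def shout(text: str) -> str:
    return text.upper()

TOOLS: Dict[str, Callable] = {"word_count": word_count, "shout": shout}

def fake_llm(goal: str, history: List[dict]) -> dict:
    """Stand-in for a real model call (an assumption, not a real API).

    It looks at the goal and at what has happened so far, then returns
    either a tool call or a final answer.
    """
    if not history:
        return {"action": "word_count", "args": {"text": goal}}
    last_result = history[-1]["result"]
    return {"action": "finish", "answer": f"The goal has {last_result} words."}

def run_agent(goal: str, llm: Callable = fake_llm, max_steps: int = 5) -> str:
    history: List[dict] = []
    for _ in range(max_steps):
        step = llm(goal, history)                 # plan: the model picks the next step
        if step["action"] == "finish":
            return step["answer"]
        tool = TOOLS[step["action"]]
        result = tool(**step["args"])             # execute: a tool does the work
        history.append({"action": step["action"], "result": result})  # reflect: feed the result back
    return "Stopped: step limit reached."
```

Calling `run_agent("count the words here")` walks the loop twice: once to call the tool, once to finish with an answer. The `max_steps` cap is a design choice I would keep in any real agent, so a confused model cannot loop forever.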

Take a coding agent, for example. Pair an LLM with tools like:

- File system to read and write code

- Package manager (like npm or pip) to install libraries

- Runtime (Python or Node.js) to execute code

- Debugger or linter to test and correct errors

Instead of typing every line of code yourself, you can let the agent plan, write, run, and refine a program on its own. We're well past the early days of GenAI, when it felt like a Q&A system. Now it's a teammate you lead, building software at your side.
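Here is a rough sketch of three of those tools wired together, using only the Python standard library. It is a simplification under stated assumptions: the built-in `compile()` stands in for a real linter, the list of `drafts` stands in for the rewrites a model would produce, and package installation is left out entirely. The function names are hypothetical.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import List, Optional

def write_file(path: Path, source: str) -> None:
    """File system tool: save the agent's code to disk."""
    path.write_text(source, encoding="utf-8")

def check_syntax(path: Path) -> Optional[str]:
    """Stand-in for a linter: return an error message, or None if the code parses."""
    try:
        compile(path.read_text(encoding="utf-8"), str(path), "exec")
        return None
    except SyntaxError as exc:
        return f"{exc.msg} (line {exc.lineno})"

def run_file(path: Path) -> str:
    """Runtime tool: execute the file with the current Python interpreter."""
    done = subprocess.run(
        [sys.executable, str(path)],
        capture_output=True, text=True, timeout=30,
    )
    return done.stdout if done.returncode == 0 else done.stderr

def write_check_run(drafts: List[str]) -> str:
    """Try each draft in order until one passes the syntax check, then run it.

    A real agent would ask the model for a fix after each error; the fixed
    list of drafts is an assumption that keeps this sketch self-contained.
    """
    with tempfile.TemporaryDirectory() as workdir:
        target = Path(workdir) / "program.py"
        for source in drafts:
            write_file(target, source)
            if check_syntax(target) is None:
                return run_file(target)
    return "No draft passed the syntax check."
```

For example, `write_check_run(["print('hi'", "print('hi')"])` rejects the first draft for its unclosed parenthesis, then runs the second one. Running generated code in a throwaway directory with a timeout is deliberate: an agent that executes code should be boxed in.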

Next, I’ll introduce you to Model Context Protocol (MCP). It’s a way AI developers are providing tools for use by Agents.

Model Context Protocol: The Universal Connector

Foundational LLMs like Google Gemini and OpenAI GPT can reason. We’ve considered how tools turn them into AI Agents. Now let’s talk about the glue that connects it all: Model Context Protocol (MCP).

MCP is a new open standard, driven by Anthropic and others in the AI community. MCP defines how LLMs can safely and consistently access tools. Think of it as a universal connector between an Agent and the capabilities it needs.

We’re already seeing major tech platforms building MCP servers. Why? To intentionally expose their services, such as APIs, databases, and messaging systems, so that Agents can plug in and get to work.

This is where AI Agents become really practical. Instead of bespoke integrations for each system, MCP provides a shared protocol for connecting reasoning (LLMs) with action (tools). That’s a big deal. We’re accelerating!
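To give a feel for what a shared protocol means in practice: MCP messages are built on JSON-RPC 2.0, and a client discovers and invokes tools with the `tools/list` and `tools/call` methods. The sketch below only builds those request messages as Python dictionaries. It does not open a connection or perform the initialization handshake, and the `search_tickets` tool in the usage note is a hypothetical name of my own, so check the MCP specification before relying on the details.

```python
import json
from itertools import count
from typing import Optional

# Each JSON-RPC request carries an id so the response can be matched to it.
_request_ids = count(1)

def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request message as a plain dictionary."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message

def list_tools_request() -> dict:
    """Ask a server which tools it offers."""
    return jsonrpc_request("tools/list")

def call_tool_request(name: str, arguments: dict) -> dict:
    """Ask a server to run one tool with the given arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})

def to_wire(message: dict) -> str:
    """Serialize a message to the JSON text that would be sent to a server."""
    return json.dumps(message)
```

A call such as `call_tool_request("search_tickets", {"query": "refund"})` produces the same message shape no matter which platform sits behind the server. That sameness is the whole appeal: the Agent learns one way to ask, and every MCP server answers it.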

Imagine a future where AI development is not just about prompting models, but about orchestrating systems of Agents that can plan, act, and adapt with the right tools at hand. It's time to think about how AI Agents can actually make a difference in your work, and why now is the moment to start planning for them.

The Real Opportunity: Applying Agents in 2026

So far, I’ve written about LLMs, reasoning, and how tools turn them into AI Agents. Now it’s time to make that theory more concrete.

For product managers, application architects, software developers, and UX designers, the real opportunity is deciding where to apply AI Agents. Address the why: not just how to build them, but why they’re economically valuable.

Through user interviews and hallway chats, I’ve listened to leaders everywhere, and they’re asking the same key questions:

- “What tasks in my company should be automated or accelerated by AI Agents?”

- “What goals can I set for them, and what prompts would drive the right outcomes?”

- “Which MCP-supplied tools would unlock the biggest impact?”

- “How will I evaluate outcomes for reliable quality?”

- “How will I gain trust in allowing AI Agents to work autonomously?”

Each of you has something to say about where Agents can make a difference. With 2026 planning already underway, there’s no better time to start thinking AI-First. The roadmap you set now will shape how you build, deliver, and operate in the next few years.

Use this guide to start identifying where AI-First belongs in your roadmap.

Plan for an AI-First Future

From simply generating text to reasoning with tool use, there’s an undeniable path from LLMs through APIs, MCP, and AI Agents to applications. Plan intentionally for 2026. Be ambitious. Have a strong vision for the future.

For example, a product team might identify daily tasks their customers’ employees find repetitive: the kind that feel like chores, offer no intrinsic motivation, carry little prestige, and generate no real revenue. Those are unmet needs, perfect for an autonomous AI Agent to take over. Build it, offer it, and you’ve just created real value.

Time is limited, but the potential is nearly limitless!