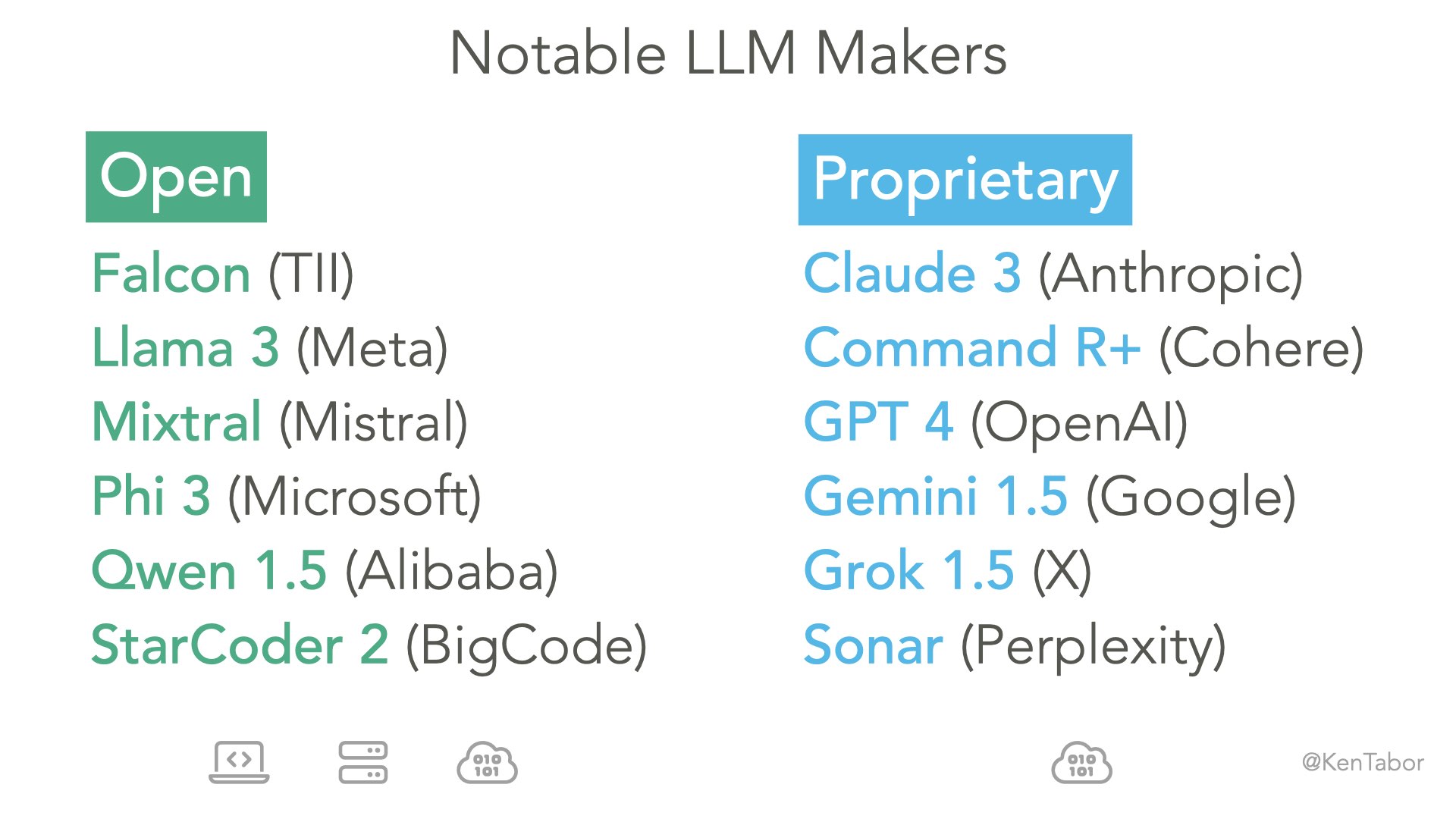

Notable Large Language Model (LLM) Providers

Navigating Through the Fast Pace of Generative AI

I took a quick minute to reflect on the notable providers of large language models (LLMs). LLMs are the key technology behind Generative AI.

Things move quickly. Version releases are turning over rapidly. New entrants are appearing monthly. Some seem to be holding back. One company was acquihired. It’s worth collecting a list of names, versions, and makers to know what our options are.

Open Source Solutions in AI

Open source (OS) means you can download an LLM from somewhere and use it largely as you like, subject to its license. Hugging Face is a common spot. It's a popular online community of data science, artificial intelligence, and software development practitioners.

OS LLMs can run on your local machine, in a private data center, or in the cloud. Cloud options include hyperscalers such as AWS, GCP, and Azure, or one of the emerging super-focused AI cloud startups.

Running an OS LLM locally is very cool and creates low-cost R&D opportunities. For now, it's rather limited to a select audience. I'm thinking AI researchers, software developers, and hard-core enthusiasts.

Software listed below makes running local LLMs surprisingly easy:

Exclusive Access: Proprietary Language Models

Proprietary LLMs are accessed through a hosted platform of their own making, or in partnership with a hyperscaler.

Programmatic access is through a REST/JSON API. These are supported by just about any modern language that offers an HTTP module. Language-specific SDKs are convenient accelerators, with Python and Node.js being the most common.
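To make the REST/JSON point concrete, here is a minimal sketch using only Python's standard library. The endpoint URL, model name, payload shape, and bearer-token header are all placeholders I made up for illustration. Every provider documents its own, so treat this as the general shape of a call rather than any vendor's actual API.

```python
import json
import urllib.request

# Hypothetical values for illustration only. Substitute your provider's
# documented endpoint, model name, and authentication scheme.
API_URL = "https://api.example.com/v1/chat"
API_KEY = "YOUR_API_KEY"


def build_request(prompt: str, model: str = "example-model") -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a hosted LLM."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `send(build_request("Hello"))` would perform the round trip once the placeholders point at a real service. A vendor SDK wraps roughly this same work and adds conveniences such as retries and typed responses.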

Some cloud providers are creating abstraction layers to improve developer experience. It's a customer acquisition play that brings extreme ease of use to AI integration. It not only shortens integration time but also enables "cheap" LLM switching for their customers.

Specifically, I read that AWS Bedrock has an SDK layer that draws out commonalities among its hosted third-party LLMs. That makes our app integration layer less complex. It also implies swapping out LLMs is as simple as a config change.
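The "config change" idea can be sketched in a few lines. This is not Bedrock's SDK. It is a toy illustration of the pattern, and the provider names, the `llm_provider` config key, and the stub adapters are all hypothetical. Real adapters would call a vendor SDK or REST endpoint instead of returning canned text.

```python
from typing import Callable, Dict


# Each adapter hides one vendor's request/response shape behind the same
# signature: prompt in, text out. These stubs are hypothetical and only
# return canned strings.
def vendor_a_complete(prompt: str) -> str:
    return f"[vendor-a] {prompt}"


def vendor_b_complete(prompt: str) -> str:
    return f"[vendor-b] {prompt}"


# Registry mapping a config value to an adapter function.
ADAPTERS: Dict[str, Callable[[str], str]] = {
    "vendor-a": vendor_a_complete,
    "vendor-b": vendor_b_complete,
}


def get_llm(config: dict) -> Callable[[str], str]:
    """Pick the adapter named in the config, failing loudly on typos."""
    name = config["llm_provider"]
    try:
        return ADAPTERS[name]
    except KeyError:
        raise ValueError(f"Unknown LLM provider: {name!r}") from None


# Switching vendors means editing this one value, not the calling code.
config = {"llm_provider": "vendor-a"}
complete = get_llm(config)
print(complete("Summarize this article."))
```

Because the application only ever sees a prompt-in, text-out function, trying a second LLM is a one-line config edit. That is what makes side-by-side experiments cheap.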

Software developers should experiment with multiple LLMs. Don't get locked into a single vendor. Discover which integration works best for an application. Demonstrate your hypotheses. Develop the final solution. Deliver into production. Win!

Your Thoughts on AI?

Consumers are benefiting from the massive investment big AI R&D labs are making.

Some will observe that the growing competition between LLM makers is a warning sign. It's hard to imagine advanced tech like this becoming another commodity item, but undifferentiated oversupply could lead to that condition.

If you have a modern MacBook with Apple Silicon, or a Windows PC with an Nvidia graphics card, you can install one of the many apps I mentioned above. Download an open source LLM and you have an AI of your own to work with. Free and clear, really.

We’re early in the age of GenAI. Choices are exciting, and sometimes confusing. That’s the joy of tech!

Reach out to me on X/Twitter or on LinkedIn, and let me know of your success. Let’s do something awesome together!