ChatGPT Quality Control

From Normal Conversation to Crazy Talk

I'm taking a deep dive with the OpenAI generative text chat completion API. It's absolutely fascinating. There's no question it's one of my favorite new technologies from the past five years and learning it is worth our time.

I wrote an app that calls the ChatGPT API across a range of temperature values to survey how the output shifts from genuinely useful text to wildly creative nonsense. In each run I provide the exact same prompt as user input.

"Speculate what a foodie would say about a Napa Valley Cabernet wine, make it positive, and in a casual tone."

What's the Temperature Going to Be?

Temperature is an incredibly important way of tuning the output of generative text AI that's based on Large Language Models (LLMs). That includes the now famous OpenAI ChatGPT and the emerging Google Bard. This value directly affects how a trained model generates text.

An LLM builds its response one token (roughly a word or word fragment) at a time, choosing each one from a wide range of candidates based on the text that came before it. It's been fed a corpus of data to detect and measure patterns in human-written text. The temperature value determines the randomness, or "creativity", in the model's output.
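To make that concrete, here is a minimal sketch of the standard "softmax with temperature" calculation. It is a textbook formulation, not a look inside OpenAI's implementation, and the three candidate words and their scores are made up for illustration. Treating a temperature of 0 as "always pick the top score" is also an assumption of this sketch.

```javascript
// Turn raw model scores (logits) into probabilities, scaled by temperature.
function softmaxWithTemperature(logits, temperature) {
  if (temperature === 0) {
    // Assumption: treat 0 as greedy decoding, all probability on the top score.
    const best = logits.indexOf(Math.max(...logits));
    return logits.map((_, i) => (i === best ? 1 : 0));
  }
  const scaled = logits.map((x) => x / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Hypothetical scores for three candidate next words.
const words = ["blackberry", "vanilla", "drawers"];
const logits = [2.0, 1.0, -1.0];

for (const t of [0, 0.5, 1, 2]) {
  const probs = softmaxWithTemperature(logits, t);
  const line = words.map((w, i) => `${w}=${probs[i].toFixed(2)}`).join(", ");
  console.log(`temperature ${t}: ${line}`);
}
```

Lower temperatures pile the probability onto the highest-scoring word, while higher temperatures flatten the distribution so unlikely words get picked more often.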

Use the value that makes sense for your application.

Do you want something that's relatively expected, accurate and ready for cut-and-paste? Use a lower number. Do you want something exploratory to help advance a brainstorming session that you're willing to double-check? Use a higher number.

Finding Your Comfortable Temperature

Higher temperature values open up the possibilities, making the generated text more creative, more unexpected, more wild. It could produce innovative ideas you wouldn't normally expect. It's also totally possible that it'll generate text that doesn't make any sense. Utterly useless gibberish. It did what you asked, but it's crap.

Send in lower temperature values and you'll see the trained model produce text that is more focused, routine, expected. It won't vary much from request to request, but then again, you're asking it for predictable responses that are commonly found in its corpus of training data.

The temperature parameter is the one thing that changes between my calls to the OpenAI Chat Completion API. It sounds like a subtle request input, but it strongly influences the response. You'll see examples of responses, and just how unexpected they can get, in the sections below. The OpenAI API Chat Completion reference explains the temperature parameter like this:

What sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic.

Funny Comparison - Biggest Range of Quality from Routine (0) to Wild (2)

Let's set some limits on the responses. Two edge cases, at the lowest and highest values of temperature, build up context for everything you'll read going forward.

Temperature of 0 (minimum value) returns something that looks like what you'd expect to read.

"Oh my goodness, this Napa Valley Cabernet is absolutely divine! The rich, full-bodied flavor is just bursting with notes of blackberry, cassis, and a hint of vanilla. It's the perfect pairing for a juicy steak or a hearty pasta dish. I could sip on this all night long and never get tired of it. Definitely a must-try for any wine lover!"

Temperature of 2 (maximum value) returns something that looks like it started off okay. Then it quickly took a hard right turn and ended up drifting into never-never-land. It didn't stick the landing, as they say. Ultimately the ChatAI is doing what you asked of it: generate something very divergent.

"Whoa, now this is my kind of Cabernet! Beyond meeting my alpha instincts thanks to throbbing notes of raspberry chocolate diamonds colluding camarrón skew bullets gascalong current powered mote dirt moussewith frenzied urban-spunned girls sausage pastry servings undercut wagon sodacasual still nature-easy grit merocyte shipwreck beach an undertime - I mean, seriously, this valley strain hits every single one of my prestige playlists ingredients like Summer Infant Never Crave-yaki If Gel for up-and-together perfect spark people short guinyo combos.

Amazing results!

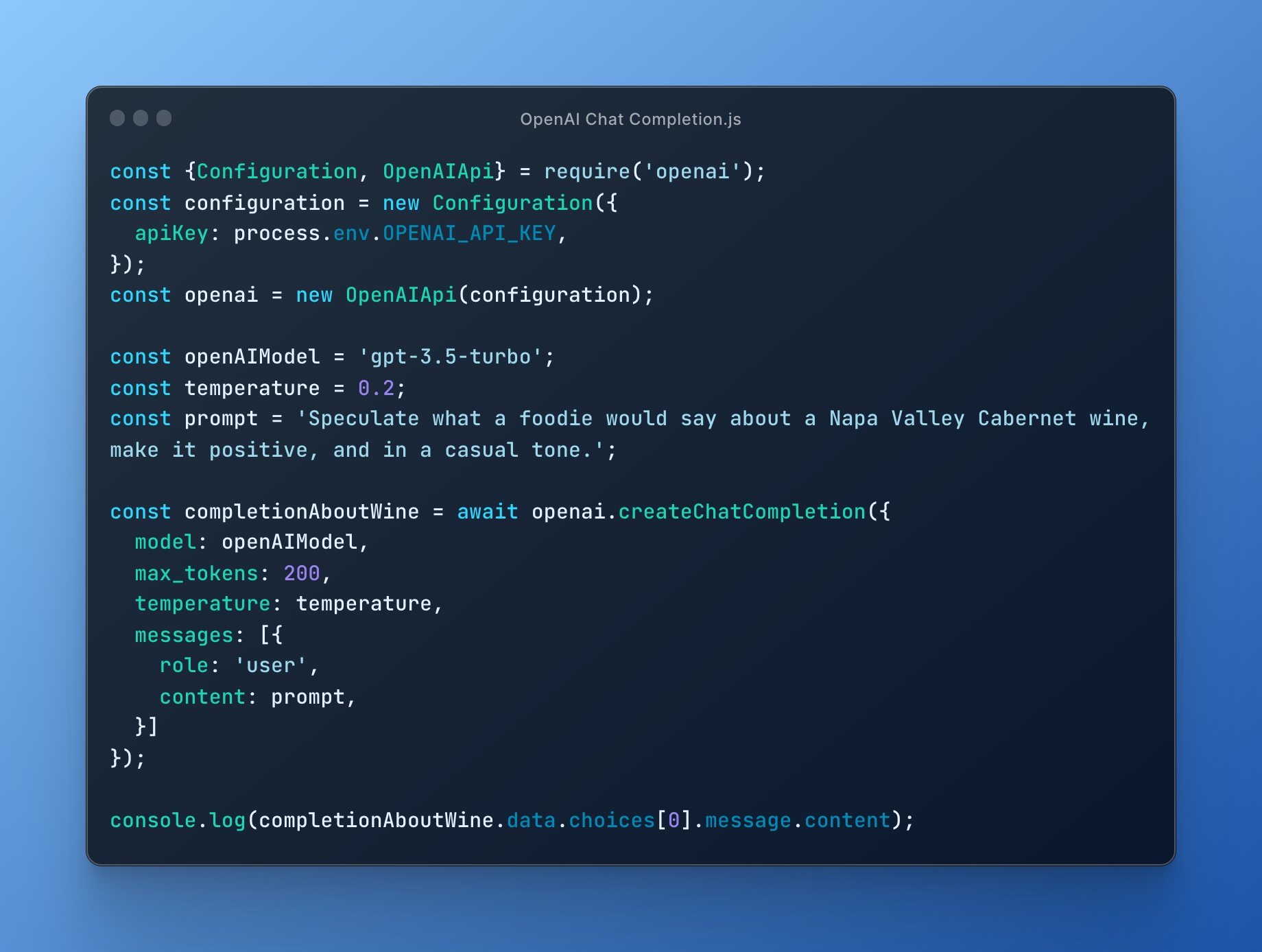

Sample Code

I like JavaScript, so I used the OpenAI Node SDK to build my little R&D app. Here's a snippet of code showing how easy chat completion requests are. Not a lot of work to unlock a ton of power.
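A minimal sketch of such a request, assuming version 4 or later of the OpenAI Node SDK, where a client object exposes `chat.completions.create()` (older SDK releases expose this call differently). The function name `askWithTemperature`, the injected `client` argument, and the `gpt-3.5-turbo` model name are assumptions made for illustration.

```javascript
// Ask for one chat completion at a given temperature.
// `client` is expected to look like an OpenAI Node SDK (v4+) client.
async function askWithTemperature(client, prompt, temperature) {
  const response = await client.chat.completions.create({
    model: "gpt-3.5-turbo", // assumed model name; swap in the one you use
    messages: [{ role: "user", content: prompt }],
    temperature, // the API accepts values between 0 and 2
  });
  // Return the text of the first (and here, only) choice.
  return response.choices[0].message.content;
}
```

With the SDK installed, you'd create the client with `new OpenAI()`, which reads the key from the `OPENAI_API_KEY` environment variable, and pass it in as the first argument. Passing the client in also makes it easy to swap in a stub while testing.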

Calling this routine multiple times gave me a solid sample of responses to analyze. Reading each response at each temperature gave me a good understanding of OpenAI's generative text capabilities. I basically looped five times through an array of values [0, 0.25, 0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0] to complete my experiment.
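The loop can be sketched roughly like this. The names `runSurvey` and `ask` are hypothetical: `ask` stands for any async function that takes a prompt and a temperature and resolves to the generated text, and the shape of the result records is my own choice for illustration.

```javascript
// The temperature values surveyed in the experiment.
const TEMPERATURES = [0, 0.25, 0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0];

// Run the same prompt at every temperature, `runs` times over.
// `ask(prompt, temperature)` must return a Promise of the generated text.
async function runSurvey(ask, prompt, runs = 5) {
  const results = [];
  for (let run = 1; run <= runs; run++) {
    for (const temperature of TEMPERATURES) {
      // Requests are made one at a time to keep the sketch simple.
      const text = await ask(prompt, temperature);
      results.push({ run, temperature, text });
    }
  }
  return results;
}
```

Five runs over nine temperatures gives 45 responses to read through and compare.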

Ultimately, for you, the real challenge is choosing when to integrate the OpenAI API into your overall codebase and, furthermore, knowing why to leverage generative chat AI as part of a business solution. That's the tricky part. Sure, this tech is totally awesome, but does your team understand how leveraging generative text adds value for your customer?

Now let's arrange a few responses into three relative ranges to get a sense of how smaller jumps in temperature affect the generated text. This is useful as you think about fine adjustments to your conversational requests.

Lower-Range: Reliable Expectations (0, 0.25, 0.5)

Let's have a look at more reliable and deterministic responses. We'll see how lower-range temperature values affect the response to the prompt above. All of them read like very reasonable versions of what you'd expect. Nothing exceptional. Relatively few variations when comparing them to one another.

Temperature of 0

"Oh my god, have you tried this Napa Valley Cabernet? It's like a symphony of flavors in my mouth. The rich, bold taste of black currants and dark chocolate is just heavenly. And the tannins are so smooth, it's like velvet on my tongue. This is definitely a wine that's meant to be savored and enjoyed with a delicious meal. I could easily see myself becoming a regular at the vineyard that produces this gem."

Temperature of 0.25

"Wow, this Napa Valley Cabernet is absolutely killer! The rich, full-bodied flavor is just bursting with notes of black cherry and cassis, and the tannins are perfectly balanced. It's like a symphony in my mouth! I can definitely see why this wine is so highly sought after by foodies and wine enthusiasts alike. It's the perfect pairing for a juicy steak or a hearty pasta dish. I'm definitely going to be stocking up on this one for my next dinner party!"

Temperature of 0.5

"Oh man, this Napa Valley Cabernet is a game-changer. The bold flavors of blackberry and cassis are perfectly balanced with a hint of oak and a smooth finish. It's like a symphony in my mouth. I can definitely taste the quality and craftsmanship that went into making this wine. It's the perfect pairing for a juicy steak or a hearty pasta dish. I could sip on this all night long."

Mid-Range: Exploratory and Interesting (0.75, 1, 1.25)

Let's have a look at more divergent and less deterministic responses to the prompt above, given mid-range temperature values. Again, all of these are completely fine outputs. You can see the common threads pulling through all of them as the model connects the dots.

Temperature of 0.75

"Oh man, this Napa Valley Cabernet is the real deal! The rich, bold flavor is just bursting with notes of blackberry, cherry, and a hint of vanilla. The tannins are perfectly balanced and the finish is smooth as silk. Pair it with a juicy steak or some aged cheese and you've got yourself a match made in heaven. This wine is definitely one to savor and enjoy with good company."

Temperature of 1

"Wow, this Napa Valley Cabernet is really bringing the heat! You can really taste those sun-kissed grapes bursting with flavor. The tannins are smooth and balanced, and the hint of oak gives it just the right amount of depth. It's the perfect wine to sip and savor with a gourmet meal or to impress your wine-loving pals at your next dinner party. I'm definitely adding this one to my wine collection!"

Temperature of 1.25

"Dude, this Napa Valley Cabernet is legit. It's like every sip sends my taste buds on a ride through the rich, dark fruit flavors with a subtle hint of oak and a smooth, velvety finish. I'm loving the balance of tannins and acidity - like a perfectly executed culinary dish that leaves nothing to be desired. I could definitely see this pairing well with a juicy prime steak or a robust cheese plate. Cheers to a quality bottle!"

It's interesting that all generated text samples appear to be roughly the same word count.
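If you want to check that observation against your own samples, a small helper like this one will do. It is a rough sketch that simply splits on whitespace, and the name `wordCount` is hypothetical.

```javascript
// Count whitespace-separated words in a piece of generated text.
function wordCount(text) {
  return text.trim().split(/\s+/).filter(Boolean).length;
}
```

Run it over each saved response and compare the counts across temperatures.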

Top-Range: Dynamic Wild (1.5, 1.75, 2.0)

Let's have a look at the most divergent and least deterministic responses. Top-range temperature values really change the prompt responses.

You can see where it starts to get speculative about which word should follow the one it has just chosen. It goes divergent, opening up to all the potential patterns found in the corpus of data it was trained on.

Temperature of 1.5

"Dude, have you tried this Napa Valley Cabernet? Talk about a flavour party! It's like a symphony in my mouth, full-bodied with rich yet smooth tannins and notes of succulent dark fruit, gentle spice and a hint of elegance. This vintage definitely resonates with my taste buds, brassy and robust with elegant refinement. I cannot get enough of it, it’s the ultimate nourishment for my denatical pursuit of gastronomy."

Temperature of 1.75

"Wow, this Napa Valley Cabernet is something special. The aromas just swirling out of the glass and hitting my nose are, no joke, grand total!!! One simple whiffs anticipates wonder if lip-smacking potent and rich cherry flavor adds on the palate. These magic vines breathe richness coupled with ta finest hints wood-grained wooden drawers rearrange combined beautifully towards crafting an argument tally knockout bottle, can gob wonders instead!"

Temperature of 2.0

"Damn, now THIS is my kind of wine. Same decadent detail and complexity as our Chef prepared New York double shot wagyu dimness raw mad or fügen perch rot mountain pastizio."

The LLM produces wonderfully unusable suggestions. Surely a cautionary tale for those looking to provide reliable text generation. Software should solve problems, not create them. That's why we do tests like this - to discover limitations.

Try Using Generative ChatAI

I'll be studying OpenAI services for a while to come. It's captured my imagination and I can't wait to put what I learn into practice. Generative AI is an emerging tech platform that feels as exciting to me as programming smartphone apps was ten years ago. I want to work with it every day!

Surely the best AI companies of the next ten years won't be obvious makers of AI. They'll be consumers leveraging this incredible tech to address opportunities, solve problems, and deliver value to their customers.

Reach out to me on Twitter or on LinkedIn, and let me know of your success. Let’s do something awesome together!